Smarter, More Reliable AI Apps: How Oblix Powers Adaptive LLM Execution

In the race to bring LLM-powered experiences into products, many companies focus on performance, latency, and cost — but often overlook something equally critical:

➡️ Reliability.

If your app fails to respond quickly or consistently, users won't care how smart your model is — they'll abandon it. Slow = broken. Down = dead.

Whether you're building AI copilots, chatbots, or internal assistants, delivering a smooth, always-on experience is essential.

That's where Oblix steps in — not just as an orchestrator of cloud and edge LLMs, but as an intelligent reliability layer that adapts to the real world.

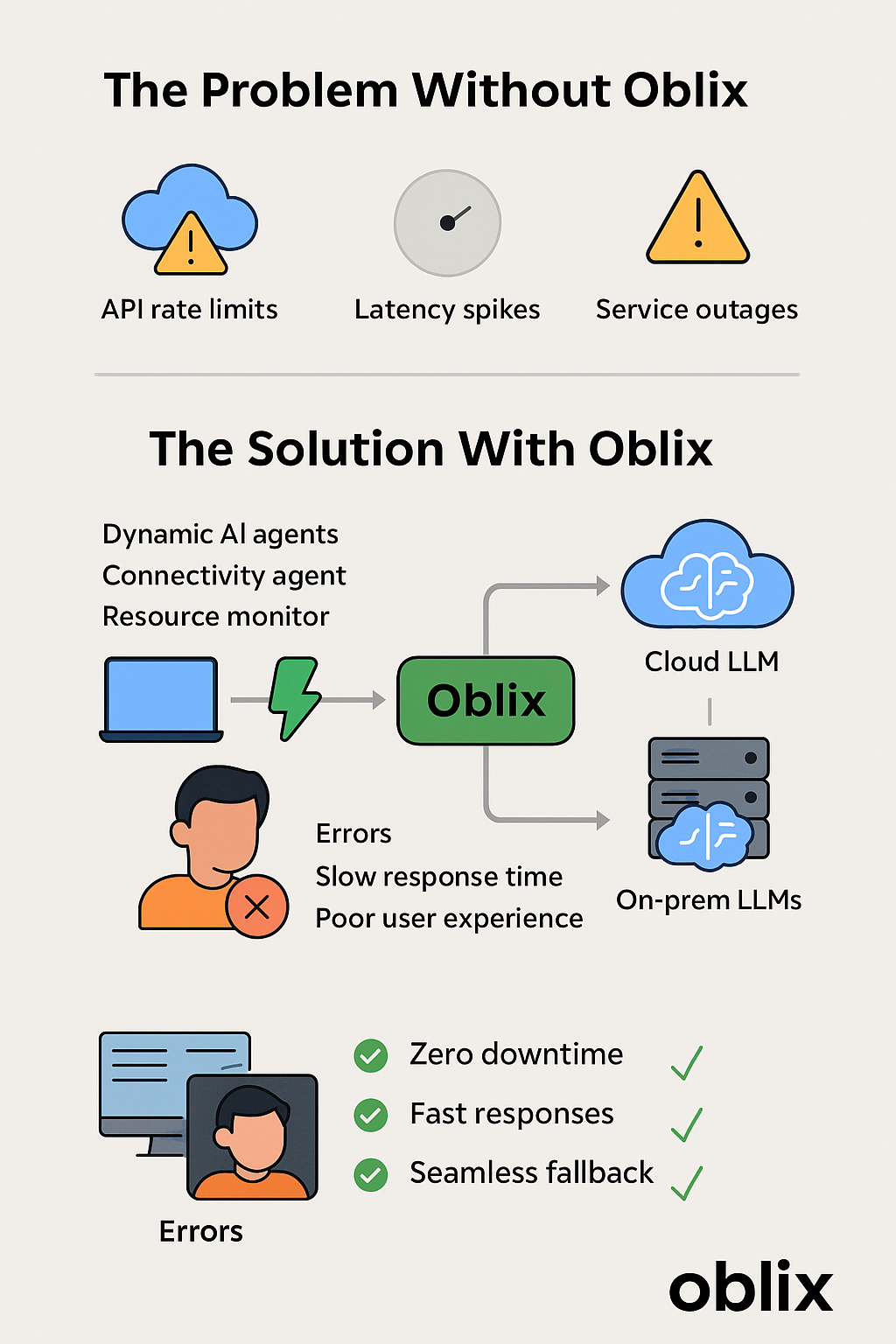

💥 The Reality of AI Apps Today

Let's say you've built a tool that uses OpenAI's GPT-4 to answer customer questions.

What happens when:

- Your user has spotty Wi-Fi at a coffee shop?

- OpenAI's API is rate-limited or degraded?

- The prompt takes longer than expected and times out?

💥 User sees a spinner, a delay, or an error.

💥 Support tickets go up.

💥 Trust goes down.

And if you try to fix it yourself, you end up adding brittle fallbacks, complicated retry logic, or duplicated model endpoints, all of which take time and effort and introduce new points of failure.

🧠 Enter Oblix: Intelligent, Adaptive LLM Routing

Oblix was built with reliability in mind — not just performance or cost. Our agents monitor your environment in real time and automatically choose the best path for each prompt.

🔍 What our agents do:

Connectivity Agent: Tracks network quality and latency, and detects outages and switches between Wi-Fi and cellular.

Resource Monitor: Checks CPU, GPU, battery, and other on-device metrics to assess whether a local model can run efficiently.

Decision Engine: Uses these signals to determine where to execute each prompt:

- ☁️ Use cloud when it's fast, available, and justified

- 💻 Fall back to local models when the cloud is slow or down

- 🔁 Retry intelligently or switch routes without user intervention
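To make the decision step concrete, here is a minimal sketch of how connectivity and resource signals could be combined into a routing choice. This is not Oblix's actual API: every name here (`Connectivity`, `Resources`, `choose_target`) and every threshold is a hypothetical chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    CLOUD = "cloud"
    LOCAL = "local"


@dataclass(frozen=True)
class Connectivity:
    """Hypothetical snapshot a connectivity agent might report."""
    online: bool
    latency_ms: float  # round-trip time to the cloud endpoint


@dataclass(frozen=True)
class Resources:
    """Hypothetical snapshot a resource monitor might report."""
    cpu_load: float     # 0.0 to 1.0
    battery_pct: float  # 0 to 100
    on_ac_power: bool


def local_is_viable(res: Resources,
                    max_cpu_load: float = 0.85,
                    min_battery_pct: float = 20.0) -> bool:
    """Return True if the device can reasonably run a local model."""
    power_ok = res.on_ac_power or res.battery_pct >= min_battery_pct
    return power_ok and res.cpu_load <= max_cpu_load


def choose_target(conn: Connectivity,
                  res: Resources,
                  max_latency_ms: float = 400.0) -> Target:
    """Pick where to run a prompt (thresholds are illustrative assumptions)."""
    # Prefer the cloud while it is reachable and responsive.
    if conn.online and conn.latency_ms <= max_latency_ms:
        return Target.CLOUD
    # Cloud is slow or down: use the local model if the device can handle it.
    if local_is_viable(res):
        return Target.LOCAL
    # Neither option is ideal: a slow cloud beats nothing, and offline
    # devices have no choice but to run locally.
    return Target.CLOUD if conn.online else Target.LOCAL
```

For example, `choose_target(Connectivity(online=True, latency_ms=900), Resources(cpu_load=0.3, battery_pct=80, on_ac_power=False))` picks the local model, because the cloud is reachable but too slow and the device has headroom.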

🎯 Real-World Example: Internal AI Copilot

Imagine your company's employees use an AI assistant to:

- Write internal docs

- Draft emails

- Pull product info from internal docs

- Summarize meeting notes

Sometimes they're in the office on a fast connection. Other times, they're on a train, plane, or weak hotel Wi-Fi. They don't want to wait — or think about routing.

With Oblix, they don't have to.

It routes prompts smartly:

- Uses your local, fine-tuned LLaMA model if the network is poor

- Uses OpenAI or Anthropic when available and optimal

- Falls back seamlessly and invisibly, according to your policies
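A rough sketch of what a transparent fallback looks like in code: try the cloud model with a time budget, and quietly switch to the local model if it errors or takes too long. The function `run_with_fallback` and its parameters are hypothetical, not part of Oblix; the models are plain callables so the example stays self-contained.

```python
import concurrent.futures
from typing import Callable

# A "model" here is any function that maps a prompt to a response.
Model = Callable[[str], str]


def run_with_fallback(prompt: str,
                      primary: Model,
                      fallback: Model,
                      timeout_s: float = 5.0) -> tuple[str, str]:
    """Return (route_used, response), preferring the primary model."""
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(primary, prompt)
    try:
        # Raises a timeout error if the primary exceeds its budget,
        # or re-raises whatever exception the primary itself raised.
        return "primary", future.result(timeout=timeout_s)
    except Exception:
        # Any failure or timeout is hidden from the caller.
        return "fallback", fallback(prompt)
    finally:
        # Do not block on a primary call that is still in flight.
        pool.shutdown(wait=False, cancel_futures=True)
```

A caller only sees a response plus a label saying which route produced it, which is useful for logging how often the fallback fires. Note that a timed-out primary call keeps running in its background thread until it returns; a production version would want real request cancellation.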

To the end user, the assistant just works — fast, reliable, and friction-free.

📈 The Business Win

By improving prompt routing through context-aware agents, Oblix delivers:

- ✅ Improved user satisfaction

- ✅ Reduced latency-related abandonment

- ✅ Fewer failed queries or support complaints

- ✅ No need to manually manage complex fallback infrastructure

- ✅ Better SLA adherence in enterprise settings

Put simply: Oblix keeps your AI app available and adaptive.

🔧 Getting Started

We currently support:

- Local models via Ollama, vLLM, HuggingFace

- Cloud APIs like OpenAI, Anthropic, Cohere

- Pluggable policy hooks for compliance, failover, and cost constraints
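As an illustration of the policy-hook idea, the sketch below chains small functions that can each force a route or abstain. It is an assumption about how such hooks could be shaped, not Oblix's real interface: `Request`, `compliance_hook`, `make_cost_hook`, and `route` are all hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass(frozen=True)
class Request:
    """Hypothetical request metadata a policy might inspect."""
    prompt: str
    contains_pii: bool = False
    est_cloud_cost_usd: float = 0.0


# A hook returns "cloud" or "local" to force a route, or None to abstain.
PolicyHook = Callable[[Request], Optional[str]]


def compliance_hook(req: Request) -> Optional[str]:
    """Keep prompts flagged as sensitive on the device."""
    return "local" if req.contains_pii else None


def make_cost_hook(max_cost_usd: float) -> PolicyHook:
    """Build a hook that avoids the cloud above a per-request budget."""
    def hook(req: Request) -> Optional[str]:
        return "local" if req.est_cloud_cost_usd > max_cost_usd else None
    return hook


def route(req: Request,
          hooks: Sequence[PolicyHook],
          default: str = "cloud") -> str:
    """Apply hooks in priority order; the first one with an opinion wins."""
    for hook in hooks:
        decision = hook(req)
        if decision is not None:
            return decision
    return default
```

With `hooks = [compliance_hook, make_cost_hook(0.05)]`, a request flagged `contains_pii=True` stays local regardless of cost, because compliance is listed first and the first non-`None` decision wins.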

If you're building a production AI app and care about:

- Reliability

- Resilience

- Seamless experience

Let's talk.

👉 oblix.ai

Let your AI app adapt to the world — not crash when it changes.

Join Our Community!

Have questions about implementing a hybrid LLM strategy in your organization? Want to connect with other developers using Oblix? Join our thriving Discord community where you can get help, share your projects, and collaborate with the Oblix team.

Join the Oblix Discord server →
