Smarter AI Inference with Oblix: A Deep Dive into Agentic Orchestration

Modern AI applications demand fast, reliable, and cost-efficient inference pipelines across diverse environments like edge devices, desktop platforms, and the cloud. Enter Oblix, a lightweight AI orchestration framework purpose-built for agent-driven routing of model workloads.

In this post, we'll unpack the architecture, show how agents make routing decisions in real time, and explain how Oblix brings production-grade orchestration to any AI developer's toolkit.

Why Orchestration Matters in AI

As foundation models grow in size and capability, developers are increasingly deploying across a hybrid stack:

- Local Execution: Smaller models running locally for privacy and speed (e.g., Mistral, Gemma)

- Cloud APIs: More powerful services (e.g., OpenAI, Claude) when local compute is constrained

- Dynamic Adaptation: Intelligent routing based on network, memory, and battery conditions

Most current solutions force developers to choose one approach or manually implement complex fallback logic. This creates unnecessary technical debt and brittle user experiences.

Oblix makes intelligent routing automatic — through agents and policies.

Oblix Architecture: Agentic by Design

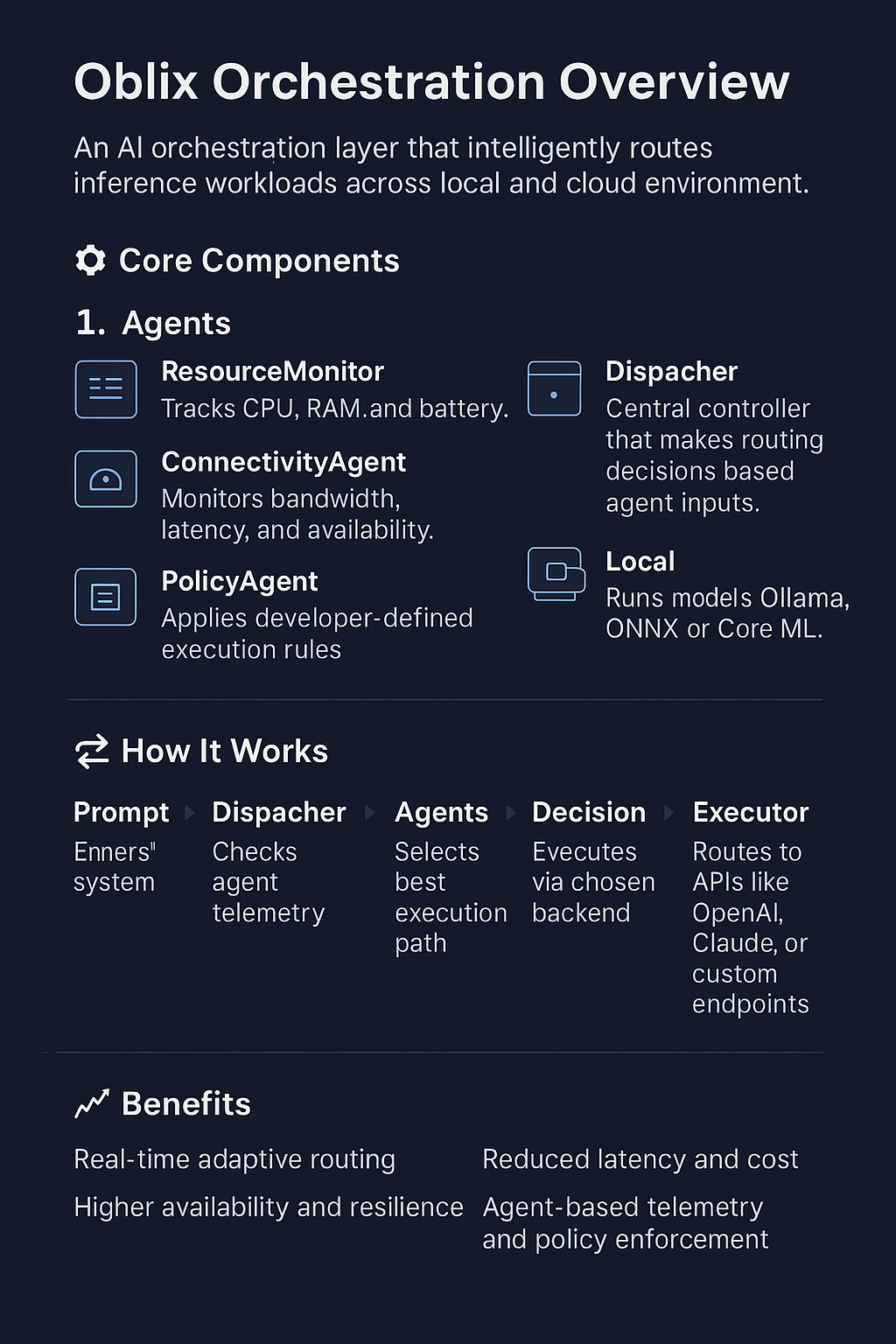

At its core, Oblix is composed of three primary components that work in concert to deliver seamless model orchestration:

Oblix's agentic architecture intelligently routes AI workloads based on real-time system telemetry

1. Intelligent Agents

Agents act as autonomous, lightweight modules that constantly observe system context:

- ResourceMonitor: Tracks CPU usage, RAM availability, and battery level to determine if local execution is feasible

- ConnectivityAgent: Monitors network quality and latency to assess if cloud services are accessible and responsive

- PolicyAgent: Applies developer-defined rules to influence execution paths based on business requirements

Each agent reports telemetry to the central dispatcher, enabling real-time routing decisions that adapt to changing conditions.
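To make the agent idea concrete, here is a minimal, self-contained sketch of how two agents might turn raw measurements into telemetry. It does not use the Oblix SDK: the class names `ResourceMonitorSketch` and `ConnectivityAgentSketch`, the `Reading` type, the `check()` method, and every threshold are assumptions chosen for illustration. The probes are injected as plain functions so the example runs without any system libraries.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Optional


@dataclass
class Reading:
    """One agent's report (hypothetical type, not part of Oblix)."""
    agent: str
    ok: bool        # True if this agent sees no obstacle for its target
    details: dict   # raw measurements behind the verdict


class ResourceMonitorSketch:
    """Decides whether local execution looks feasible.

    The thresholds are illustrative assumptions, not Oblix defaults.
    """

    def __init__(self, probe: Callable[[], Dict[str, float]],
                 max_cpu: float = 85.0,
                 min_free_ram_gb: float = 2.0,
                 min_battery: float = 20.0) -> None:
        self.probe = probe
        self.max_cpu = max_cpu
        self.min_free_ram_gb = min_free_ram_gb
        self.min_battery = min_battery

    def check(self) -> Reading:
        m = self.probe()
        ok = (m["cpu_percent"] <= self.max_cpu
              and m["free_ram_gb"] >= self.min_free_ram_gb
              and m["battery_percent"] >= self.min_battery)
        return Reading("resource", ok, dict(m))


class ConnectivityAgentSketch:
    """Decides whether cloud execution looks reachable and responsive."""

    def __init__(self, probe: Callable[[], Optional[float]],
                 max_latency_ms: float = 300.0) -> None:
        self.probe = probe  # returns latency in ms, or None if offline
        self.max_latency_ms = max_latency_ms

    def check(self) -> Reading:
        latency = self.probe()
        ok = latency is not None and latency <= self.max_latency_ms
        return Reading("connectivity", ok, {"latency_ms": latency})


def collect(agents: Iterable) -> Dict[str, Reading]:
    """Gather one reading per agent, keyed by agent name."""
    return {r.agent: r for r in (a.check() for a in agents)}
```

In a real deployment the probes would read live system and network state; here they are stand-ins so the decision logic can be exercised in isolation.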

2. Central Dispatcher

The dispatcher serves as the decision-making engine at the heart of Oblix:

- Consumes telemetry data from all active agents

- Applies sophisticated routing algorithms to determine the optimal execution path

- Manages failover, retry logic, and graceful degradation

- Balances performance, cost, reliability, and data privacy requirements

This creates a low-overhead, plug-and-play routing layer that shields developers from the complexities of multi-environment execution.
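The dispatcher's two jobs, choosing an order of execution paths and failing over when one breaks, can be sketched in a few lines. This is an illustrative approximation, not Oblix's actual routing algorithm: `plan_route`, `dispatch`, the `"local"`/`"cloud"` names, and the retry count are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical executor shape: takes a prompt, returns generated text.
Executor = Callable[[str], str]


def plan_route(local_ok: bool, cloud_ok: bool,
               prefer_local: bool = True) -> List[str]:
    """Return executor names in the order they should be tried."""
    order = ["local", "cloud"] if prefer_local else ["cloud", "local"]
    healthy = {"local": local_ok, "cloud": cloud_ok}
    # Stable sort: healthy targets move to the front, the preferred order
    # is kept among equals, and unhealthy targets remain as a last resort.
    return sorted(order, key=lambda name: not healthy[name])


def dispatch(prompt: str, executors: Dict[str, Executor],
             route: List[str], retries: int = 1) -> Tuple[str, str]:
    """Try each executor in route order, retrying before failing over."""
    errors: List[str] = []
    for name in route:
        for attempt in range(retries + 1):
            try:
                return name, executors[name](prompt)
            except Exception as exc:  # broad catch is fine for a sketch
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
    raise RuntimeError("all executors failed: " + "; ".join(errors))
```

Keeping unhealthy targets at the end of the route, rather than dropping them, is one way to get graceful degradation: a path that telemetry flagged as poor is still attempted if everything else fails.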

3. Versatile Executors

Executors implement the actual model inference across different environments:

- Local Execution: Run models using Ollama, Core ML, or ONNX Runtime with no external dependencies

- Cloud Execution: Seamlessly dispatch requests to OpenAI, Anthropic, or custom endpoints

- Consistent Interface: Same API regardless of where the model runs
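The "same API regardless of where the model runs" idea maps naturally onto a shared interface. The sketch below uses Python's `typing.Protocol` to show the shape. The `Executor` protocol, its `generate` method, and the two stub classes are assumptions for illustration, and the stubs only echo the prompt; a real local executor would call something like Ollama, and a real cloud executor would call a provider API.

```python
from typing import Dict, Protocol


class Executor(Protocol):
    """Hypothetical common interface for every execution target."""
    name: str

    def generate(self, prompt: str) -> str:
        ...


class LocalEcho:
    """Stand-in for a local backend; it only echoes the prompt."""
    name = "local"

    def generate(self, prompt: str) -> str:
        return f"[local] {prompt}"


class CloudEcho:
    """Stand-in for a cloud backend; it only echoes the prompt."""
    name = "cloud"

    def generate(self, prompt: str) -> str:
        return f"[cloud] {prompt}"


def run(executor: Executor, prompt: str) -> Dict[str, str]:
    """Caller code is identical no matter which executor is passed in."""
    return {"executor": executor.name, "text": executor.generate(prompt)}
```

Because `run` depends only on the protocol, adding a new backend means writing one class with a `name` and a `generate` method, with no changes to the calling code.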

How Oblix Works in Practice

When a developer sends a prompt through Oblix, a sophisticated orchestration sequence activates:

1. The prompt enters the system and is intercepted by the dispatcher

2. All agents report current telemetry (resource availability, connectivity status, policy constraints)

3. The PolicyAgent applies business rules to filter available execution paths

4. The optimal executor is selected based on the current context

5. The model runs in the selected environment

6. Results are returned to the application with detailed execution metrics

The routing decision itself completes within milliseconds, so developers get the benefits of sophisticated orchestration without a noticeable performance penalty on top of the model's own inference time.
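The sequence above can be approximated end to end in one small function. This is a sketch under stated assumptions, not the Oblix implementation: `handle`, `local_only`, the shape of the telemetry dictionary, and the fields of the returned metrics are all hypothetical.

```python
import time
from typing import Callable, Dict, List

Executor = Callable[[str], str]            # hypothetical executor shape
Policy = Callable[[List[str]], List[str]]  # filters candidate executor names


def local_only(candidates: List[str]) -> List[str]:
    """Example policy: a privacy rule that forbids cloud execution."""
    return [name for name in candidates if name == "local"]


def handle(prompt: str, telemetry: Dict[str, bool], policy: Policy,
           executors: Dict[str, Executor]) -> Dict[str, object]:
    """Route one prompt and return the result with basic metrics."""
    started = time.perf_counter()
    # Telemetry decides which execution paths are currently feasible.
    candidates = [name for name in executors if telemetry.get(name, False)]
    # The policy filters those paths according to business rules.
    allowed = policy(candidates)
    if not allowed:
        raise RuntimeError("no executor satisfies telemetry and policy")
    # Take the first remaining path; dict order encodes preference here.
    chosen = allowed[0]
    text = executors[chosen](prompt)
    elapsed_ms = (time.perf_counter() - started) * 1000.0
    return {"text": text, "executor": chosen,
            "candidates": candidates, "latency_ms": elapsed_ms}
```

Note that `latency_ms` here covers both the routing decision and the model call, so in practice it would be dominated by inference time rather than by orchestration.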

Real-World Example: AI Customer Support Bot

Consider building a customer support agent that needs to operate reliably under various conditions:

- Uses Mistral locally via Ollama for 90% of standard queries

- Falls back to Claude or GPT-4 for complex troubleshooting scenarios

- Dynamically reroutes based on CPU spikes, poor WiFi, or battery constraints

- Maintains consistent user experience regardless of which model handles the request

Without Oblix, implementing this robustly would mean hand-writing and maintaining the monitoring, fallback, and retry logic yourself. With Oblix, the same behavior is achieved with minimal code and no manual intervention at runtime.

Extensibility: Build Your Own Agents

One of Oblix's key strengths is its modular design and extensibility:

- Custom Agents: Create specialized agents for your unique infrastructure (e.g., GeolocationAgent for regional compliance)

- Tailored Policies: Write custom PolicyAgent logic to implement business rules (e.g., prioritizing low-power modes on mobile)

- Advanced Routing: Create sophisticated fallback chains or tiered executor priorities to handle specialized use cases
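As a rough illustration of the first and third points, the sketch below pairs a custom compliance agent with a tiered fallback chain. Nothing here is taken from the Oblix SDK: `GeolocationAgentSketch`, `build_chain`, the region codes, and the tier names are hypothetical, and how a custom agent is actually registered is left to the Oblix documentation.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class GeolocationAgentSketch:
    """Hypothetical agent: allows cloud calls only from approved regions."""
    region: str
    allowed_cloud_regions: FrozenSet[str]

    def allows_cloud(self) -> bool:
        return self.region in self.allowed_cloud_regions


def build_chain(tiers: List[str], cloud_names: Set[str],
                cloud_allowed: bool) -> List[str]:
    """Keep the tier order, dropping cloud tiers when they are not allowed."""
    return [name for name in tiers
            if cloud_allowed or name not in cloud_names]


# Illustrative tier names, ordered from most to least preferred.
TIERS = ["local-small", "cloud-primary", "cloud-backup"]
CLOUD_TIERS = {"cloud-primary", "cloud-backup"}
```

For example, an agent created as `GeolocationAgentSketch("eu", frozenset({"us"}))` reports that cloud calls are not allowed, so `build_chain(TIERS, CLOUD_TIERS, agent.allows_cloud())` leaves only the local tier in the chain.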

Benefits for AI Developers

Oblix delivers tangible advantages for teams building AI-powered applications:

- Enhanced Reliability: Applications continue functioning even when connectivity is limited

- Optimized Costs: Intelligent routing to local models when appropriate to minimize cloud API expenses

- Improved Performance: Adaptive execution based on real-time system conditions

- Developer Productivity: Eliminate complex routing logic and focus on core application features

- Future-Proof Design: Easily integrate new models and providers as they emerge

Getting Started with Oblix

Ready to try Oblix for your next AI project? Visit our comprehensive documentation to:

- Install the Oblix SDK

- Configure your telemetry agents

- Register local and cloud model executors

- Define custom orchestration policies

- Build resilient AI applications with intelligent routing

Conclusion

Oblix represents a new paradigm in LLM orchestration: agentic, context-aware, and developer-first. By bringing the dynamic behavior of autonomous agents to the inference layer, Oblix gives your AI applications the adaptability they need to thrive in production environments with diverse operating conditions.

Start building smarter, more resilient AI systems today — with Oblix.

Join Our Discord Community!

Have questions about agentic orchestration? Want to connect with other developers using Oblix? Join our thriving Discord community where you can get help, share your projects, and collaborate with the Oblix team.

Join the Oblix Discord server →

About Oblix

Oblix is an AI orchestration SDK that seamlessly routes between local and cloud models based on connectivity and system resources. It provides a unified interface for AI model execution, making your applications more resilient, cost-effective, and privacy-conscious.